Discover InfiniBand and the InfiniBand Switch

InfiniBand Technology Analysis

What is InfiniBand?

InfiniBand (IB) is a network communication standard designed for high-performance computing and data centers. Its core purpose is to remove the latency bottleneck caused by the traditional Ethernet TCP/IP protocol stack and operating system overhead. Unlike traditional networks, InfiniBand uses point-to-point links in a channel-based switched fabric, and it lets devices exchange data directly by taking the operating system out of the data path.

InfiniBand technology has achieved three major technological breakthroughs:

- An application-centric communication model: applications can directly read and write remote memory through RDMA, achieving zero-copy data transmission

- A unified interconnect that consolidates storage I/O, network I/O and IPC (inter-process communication)

- Continuously evolving bandwidth standards that keep pace with explosive data growth, from SDR 10Gbps through EDR 100Gbps to the current HDR 200Gbps and NDR 400Gbps
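To make the bandwidth figures concrete, the following sketch computes the effective data rate of a 4x link for the earlier generations. It assumes the commonly published per-lane signaling rates and line encodings (8b/10b up to QDR, 64b/66b for FDR and EDR); HDR and NDR are left out because their PAM4 signaling and error-correction overhead are accounted for differently. The function and table names are illustrative only.

```python
from fractions import Fraction

# Per-lane signaling rate in Gb/s and line encoding (payload bits, coded bits).
# Assumed values: the commonly published figures for each generation.
GENERATIONS = {
    "SDR": (Fraction("2.5"), (8, 10)),
    "DDR": (Fraction("5"), (8, 10)),
    "QDR": (Fraction("10"), (8, 10)),
    "FDR": (Fraction("14.0625"), (64, 66)),
    "EDR": (Fraction("25.78125"), (64, 66)),
}


def data_rate_gbps(generation: str, lanes: int = 4) -> float:
    """Effective data rate of a link once encoding overhead is removed."""
    signaling, (payload_bits, coded_bits) = GENERATIONS[generation]
    return float(signaling * lanes * payload_bits / coded_bits)


if __name__ == "__main__":
    for name in GENERATIONS:
        print(f"{name} 4x: {data_rate_gbps(name):.2f} Gb/s of data")
```

The gap between signaling rate and data rate narrows sharply once 64b/66b encoding replaces 8b/10b, which is part of why later generations deliver close to their nominal rate.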

InfiniBand Core Advantages

The core advantages of InfiniBand are mainly reflected in the following points:

- Ultra-low latency: InfiniBand uses RDMA to bring end-to-end latency below 0.6μs, a key metric in the HPC field. That is roughly an order of magnitude lower than 10 Gigabit Ethernet, which is crucial for AI training and high-frequency financial trading. The underlying mechanism is kernel bypass: data moves from the network adapter directly into application memory, avoiding the overhead of CPU interrupts and memory copies.

- Ultra-high throughput: A single NDR port runs at 400Gb/s, and clusters can scale near-linearly to thousands of nodes, meeting the communication requirements of thousand-GPU clusters. The streamlined protocol design keeps the payload ratio above 98%.

- Elastic scalability: Fat-Tree, Hypercube and other topologies support clusters of 10,000 nodes. The non-blocking switching architecture combines credit-based flow control with virtual lanes (VLs) to prevent packet loss from buffer overflow and limit the impact of congestion.

| Comparison Criteria | InfiniBand | Traditional Ethernet |
| --- | --- | --- |
| End-to-end latency | <0.6μs | >5μs |
| CPU utilization | <5% | 15%-30% |
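The credit-based flow control mentioned among the advantages above can be illustrated with a small simulation. This is a toy model of a single virtual lane, not the actual link-layer protocol: the class name, the tick-based loop, and the buffer sizes are all assumptions made for illustration. The point it shows is that a sender which only transmits while holding credits can never overflow the receiver's buffer; it stalls instead of dropping.

```python
from collections import deque


class CreditLink:
    """Toy model of one virtual lane between a sender and a receiver."""

    def __init__(self, buffer_slots: int):
        self.capacity = buffer_slots
        self.credits = buffer_slots   # credits currently held by the sender
        self.rx_buffer = deque()      # receiver-side buffer
        self.max_occupancy = 0        # highest buffer fill level observed

    def try_send(self, packet) -> bool:
        """Transmit only if a credit is available; otherwise the sender waits."""
        if self.credits == 0:
            return False
        self.credits -= 1
        self.rx_buffer.append(packet)
        self.max_occupancy = max(self.max_occupancy, len(self.rx_buffer))
        return True

    def drain(self, n: int = 1) -> int:
        """Receiver consumes up to n packets and returns one credit for each."""
        freed = 0
        while self.rx_buffer and freed < n:
            self.rx_buffer.popleft()
            freed += 1
        self.credits += freed
        return freed


def run(total_packets: int, buffer_slots: int, drain_every: int) -> dict:
    """Send packets every tick while the receiver drains one every few ticks."""
    link = CreditLink(buffer_slots)
    sent = delivered = stalled = tick = 0
    while delivered < total_packets:
        tick += 1
        if sent < total_packets:
            if link.try_send(sent):
                sent += 1
            else:
                stalled += 1
        if tick % drain_every == 0:
            delivered += link.drain()
    return {
        "delivered": delivered,
        "stalled_ticks": stalled,
        "max_occupancy": link.max_occupancy,
    }


if __name__ == "__main__":
    print(run(total_packets=20, buffer_slots=4, drain_every=3))
```

With a slow receiver, the sender accumulates stalled ticks, but the buffer occupancy never exceeds the number of slots, so nothing is lost.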

InfiniBand Application Scenarios

InfiniBand's low latency and high bandwidth make it a common choice in the following fields:

- High-performance computing: More than 60% of the world’s top 500 supercomputers use InfiniBand to build interconnection networks. Typical cases include the Summit supercomputer at Oak Ridge National Laboratory in the United States. Its InfiniBand-based communication architecture supports scientific research tasks such as climate simulation and materials science that require trillions of data exchanges.

- Artificial intelligence and machine learning: In the training of large models such as GPT-4 and Stable Diffusion, thousands of GPUs are clustered through InfiniBand switches, and RDMA technology is used to achieve low-latency transmission of parameter synchronization, shortening model training time from weeks to days.

- Data center and storage network: InfiniBand supports the NVMe-over-Fabrics protocol, which reduces the access latency of distributed storage systems to the microsecond level and improves IOPS performance by more than 5 times. In cloud computing scenarios, InfiniBand also provides a lossless network environment for virtual machine migration and real-time data analysis, meeting the stringent requirements of financial transactions, autonomous driving simulation and similar workloads.

InfiniBand Network Architecture Analysis

Core Component Composition

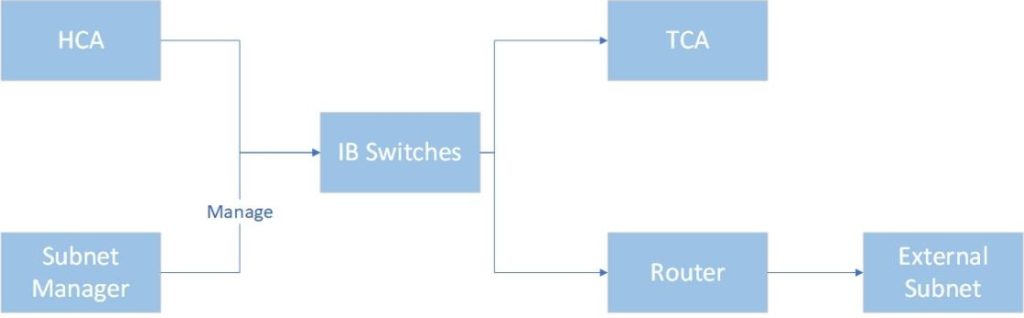

An InfiniBand network relies mainly on the following four core components:

- Host channel adapter (HCA): the interface between the server and the IB network. It handles protocol conversion and controls memory access directly through its RDMA engine.

- Target channel adapter (TCA): a lightweight adapter designed specifically for storage devices such as SAN and NVMe arrays. It lets the storage controller connect directly over the IB protocol.

- InfiniBand core switch: uses a non-blocking crossbar architecture and supports line-rate forwarding and adaptive flow control across a wide range of port densities. It also integrates the SHARP in-network computing engine, which can offload aggregation operations such as All-Reduce and accelerate AI training.

- InfiniBand subnet manager (SM): dynamically allocates LIDs, builds forwarding tables, monitors link status, and enables a self-healing network. Multiple IB subnets can be connected through InfiniBand routers, which route across subnets using GIDs.
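The subnet manager's two central jobs, allocating LIDs and building forwarding tables, can be sketched as follows. This is a simplified illustration and not how any real subnet manager is implemented: the five-node fabric, the node names, and the one-LID-per-node simplification are assumptions, and a shortest-path breadth-first search stands in for a production routing engine.

```python
from collections import deque

# Hypothetical fabric: node -> {local port number: neighbor node}.
FABRIC = {
    "sw1": {1: "hostA", 2: "hostB", 3: "sw2"},
    "sw2": {1: "hostC", 2: "sw1"},
    "hostA": {1: "sw1"},
    "hostB": {1: "sw1"},
    "hostC": {1: "sw2"},
}
SWITCHES = {"sw1", "sw2"}  # only switches forward traffic


def assign_lids(fabric: dict, first_lid: int = 1) -> dict:
    """Give every node a unique LID (sorted order keeps the result stable)."""
    return {node: first_lid + i for i, node in enumerate(sorted(fabric))}


def build_forwarding_table(fabric: dict, lids: dict, switch: str) -> dict:
    """Map destination LID -> output port of `switch` along a shortest path."""
    table = {}
    visited = {switch}
    queue = deque()
    # Seed the search with the direct neighbors, remembering the exit port.
    for port, neighbor in sorted(fabric[switch].items()):
        if neighbor not in visited:
            visited.add(neighbor)
            table[lids[neighbor]] = port
            if neighbor in SWITCHES:
                queue.append((neighbor, port))
    # Every node discovered later inherits the exit port of its first hop.
    while queue:
        node, first_port = queue.popleft()
        for _, neighbor in sorted(fabric[node].items()):
            if neighbor not in visited:
                visited.add(neighbor)
                table[lids[neighbor]] = first_port
                if neighbor in SWITCHES:
                    queue.append((neighbor, first_port))
    return table


if __name__ == "__main__":
    lids = assign_lids(FABRIC)
    for sw in sorted(SWITCHES):
        print(sw, build_forwarding_table(FABRIC, lids, sw))
```

Rerunning the table construction after removing a failed link from `FABRIC` gives a rough picture of the self-healing behavior: the manager recomputes paths and pushes new tables to the switches.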

Common Topologies of InfiniBand

There are two common topologies in InfiniBand network solutions: Fat-Tree structure and Mesh structure.

- Fat-Tree Structure

Fat-Tree is a layered network architecture, usually divided into core layer, aggregation layer and edge layer. Its core design concept is to achieve non-blocking forwarding through the “Fat” link structure to ensure high throughput and low latency. In a typical three-layer Fat-Tree, edge layer switches are directly connected to servers or GPU nodes, aggregation layer switches are responsible for aggregating traffic, and core layer switches provide global high-speed interconnection.

- Mesh Structure

Mesh topology arranges nodes and switches in a regular two-dimensional or three-dimensional grid. Each switch usually connects to 4 or 6 adjacent switches and also to several computing nodes. The structure is simple and highly scalable: new nodes are added at the edge of the grid without large-scale changes to the overall architecture. However, because traffic is forwarded hop by hop between adjacent switches, communication between distant nodes may pass through many switches, resulting in relatively high latency. Mesh is therefore better suited to medium-to-large-scale scenarios with regular communication patterns.
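The scaling trade-off between the two topologies can be put into numbers with a short sketch. It relies on the standard sizing result for a non-blocking fat-tree built from identical k-port switches (k²/2 hosts with two levels, k³/4 with three) and on Manhattan distance for a mesh without wraparound links. The function names and the example port counts are illustrative.

```python
def fat_tree_hosts(switch_ports: int, levels: int) -> int:
    """Maximum hosts in a non-blocking fat-tree of identical k-port switches.

    Each non-top level splits ports evenly between downlinks and uplinks,
    which gives 2 * (k/2)^levels hosts.
    """
    if switch_ports % 2 or levels < 1:
        raise ValueError("expects an even port count and at least one level")
    return 2 * (switch_ports // 2) ** levels


def mesh_hops(src: tuple, dst: tuple) -> int:
    """Switch-to-switch hops between two grid coordinates (no wraparound)."""
    if len(src) != len(dst):
        raise ValueError("coordinates must have the same dimension")
    return sum(abs(a - b) for a, b in zip(src, dst))


if __name__ == "__main__":
    for ports in (40, 64):
        print(ports, "ports:", fat_tree_hosts(ports, 2), "hosts (2 levels),",
              fat_tree_hosts(ports, 3), "hosts (3 levels)")
    print("3D mesh corner to corner:", mesh_hops((0, 0, 0), (7, 7, 7)), "hops")
```

In a fat-tree the worst-case path length is fixed by the number of levels, whereas in a mesh it grows with the grid dimensions, which is the latency penalty described above.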

Analysis of RDMA Key Technologies

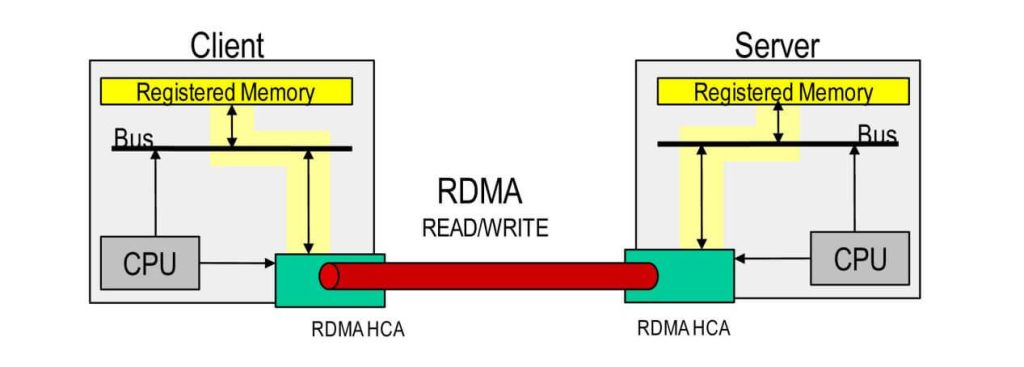

RDMA is the core technology that enables InfiniBand performance to surpass Ethernet. Its breakthroughs are mainly reflected in the following aspects:

- Zero-copy data transmission: In a traditional network stack, data is copied up to three times as it passes through user space, kernel space, and the network card buffer. With RDMA, the HCA accesses peer memory directly, so a transfer needs only one DMA operation and skips the OS kernel copies, which significantly reduces CPU consumption and improves bandwidth utilization.

- Kernel bypass technology: User programs talk to the HCA directly through the Verbs API, so the data path involves no system calls, context switches, or operating system intervention, which significantly reduces per-message latency.

- Transport layer hardware offloading: At the protocol layer, HCA hardware can be used to handle tasks such as flow control and CRC verification, releasing CPU computing power. Advanced capabilities include atomic operations and congestion control.
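The one-sided nature of an RDMA write can be illustrated with a toy model. This is not a binding to the real Verbs API: `ToyHCA`, `MemoryRegion`, and `rdma_write` are hypothetical names, and ordinary Python objects stand in for adapter hardware. What the sketch does reflect is the general pattern: the target registers a memory region and hands out a remote key, and the initiator then writes into that region directly, with the adapter checking the key and bounds rather than the target application handling each transfer.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class MemoryRegion:
    """Stand-in for a registered memory region on the target host."""
    buffer: bytearray
    rkey: int = field(default_factory=lambda: secrets.randbits(32))


class ToyHCA:
    """Very rough model of the target-side adapter (hypothetical, not verbs)."""

    def __init__(self):
        self.regions = {}  # rkey -> MemoryRegion

    def register(self, size: int) -> MemoryRegion:
        """Register a buffer so that remote peers holding the rkey may access it."""
        region = MemoryRegion(bytearray(size))
        self.regions[region.rkey] = region
        return region

    def rdma_write(self, rkey: int, offset: int, payload: bytes) -> None:
        """One-sided write: validate key and bounds, then place data in place."""
        region = self.regions.get(rkey)
        if region is None:
            raise PermissionError("unknown rkey")
        if offset < 0 or offset + len(payload) > len(region.buffer):
            raise ValueError("write outside the registered region")
        # Slice assignment through a memoryview writes into the existing
        # buffer without creating an intermediate copy of the region.
        memoryview(region.buffer)[offset:offset + len(payload)] = payload


if __name__ == "__main__":
    target = ToyHCA()
    region = target.register(16)
    target.rdma_write(region.rkey, 4, b"data")
    print(bytes(region.buffer))
```

Note that the target application never calls a receive function here; it simply finds the data in its buffer, which is the property that removes the per-message CPU work described in the list above.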

InfiniBand Switch

What Is an InfiniBand Switch?

In InfiniBand networks, switches are not only the hub for data flow but also the core engine for high-performance, low-latency communication. InfiniBand switches are link-layer devices and the core interconnect for building high-performance computing and AI clusters. Their main function is to forward data efficiently between nodes within an InfiniBand subnet, making forwarding decisions based on each packet's destination local identifier (LID). Compared with traditional Ethernet switches, InfiniBand switches use a centralized management architecture: the subnet manager (SM) allocates LIDs and builds the forwarding tables, which enables network self-healing and topology optimization. Physically, they are divided into edge switches, used for non-blocking communication within a cabinet, and core switches, used for large-scale networking across cabinets.

Key Features of InfiniBand Switches

- Guaranteed Lossless Data Transmission

InfiniBand switches support credit-based flow control (CBFC), which uses a dynamic credit mechanism to ensure that the amount of data sent never exceeds the capacity of the receive buffer, thus avoiding packet loss at the source. In addition, the adaptive routing mechanism of InfiniBand switches handles local congestion by selecting a path packet by packet and optimizing that choice in real time based on the depth of the switch egress queues.

- Protocol Offloading and Computing Acceleration

InfiniBand switches support in-network computing engines, which can offload collective operations such as All-Reduce to the switch layer and reduce gradient synchronization traffic between GPUs. In addition, some switch models can run InfiniBand and Ethernet protocols at the same time, so that the compute network and the storage network share one fabric.

- High Reliability and Manageability

InfiniBand switches support subnet management agents (SMA) to configure network policies, performance management agents (PMA) to monitor throughput, and baseboard management agents (BMA) to monitor hardware health. Together, these three agents make the network efficient to manage. In terms of reliability, InfiniBand switches support a partition isolation mechanism, which uses partition keys to isolate networks logically so that critical business traffic is not interfered with.
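The partition isolation described above comes down to a simple comparison of partition keys. The sketch below assumes the usual 16-bit P_Key layout, where the low 15 bits identify the partition and the top bit marks full membership, and the usual rule that two limited members of the same partition cannot talk to each other. The function name and the example key values are illustrative.

```python
FULL_MEMBER_BIT = 0x8000  # top bit of the 16-bit P_Key marks full membership
PARTITION_MASK = 0x7FFF   # low 15 bits identify the partition


def can_communicate(pkey_a: int, pkey_b: int) -> bool:
    """Two ports may talk if they share a partition and at least one is a full member."""
    same_partition = (pkey_a & PARTITION_MASK) == (pkey_b & PARTITION_MASK)
    either_full = bool((pkey_a | pkey_b) & FULL_MEMBER_BIT)
    return same_partition and either_full


if __name__ == "__main__":
    storage_full, storage_limited = 0x8001, 0x0001   # hypothetical storage partition
    training_full = 0x8002                            # hypothetical training partition
    print(can_communicate(storage_full, storage_limited))     # server and client
    print(can_communicate(storage_limited, storage_limited))  # two clients
    print(can_communicate(storage_full, training_full))       # different partitions
```

A common use of limited membership is a shared service: the server joins as a full member and the clients as limited members, so every client can reach the server but clients cannot reach one another.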

How to Choose an InfiniBand Switch?

InfiniBand Switch Key Parameters

When purchasing an InfiniBand switch, the following parameters deserve special attention:

- Port rate and port density: The port rate of an InfiniBand switch directly determines its data transmission speed and switching capacity. Common rates include EDR 100G, HDR 200G, and NDR 400G. The number of ports also directly affects the scalability of the network. Common port counts are 32, 40, and 64, and high-density switches are suitable for large-scale cluster deployment.

- Management functions: Advanced management functions simplify network configuration and maintenance. An example is a built-in subnet manager (SM), which manages the topology and routing of the InfiniBand network.

- Redundant design: For mission-critical applications, switches should have redundant power supplies, fans, and management modules to ensure high network availability.

- Compatibility and scalability: Confirm that candidate switches are compatible with your existing equipment and can support future network upgrades.
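When comparing models on port rate and port density, two quick calculations are useful: the aggregate switching capacity and the oversubscription ratio of an edge switch. The sketch below is plain arithmetic under the usual convention that capacity is quoted bidirectionally; the function names and the example port splits are assumptions for illustration.

```python
def switching_capacity_tbps(ports: int, port_rate_gbps: int) -> float:
    """Aggregate bidirectional capacity: every port sends and receives at line rate."""
    return ports * port_rate_gbps * 2 / 1000


def edge_oversubscription(downlinks: int, uplinks: int) -> float:
    """Ratio of host-facing to fabric-facing ports (1.0 means non-blocking)."""
    if uplinks <= 0:
        raise ValueError("an edge switch needs at least one uplink")
    return downlinks / uplinks


if __name__ == "__main__":
    print("40 x HDR 200G:", switching_capacity_tbps(40, 200), "Tb/s")
    print("64 x NDR 400G:", switching_capacity_tbps(64, 400), "Tb/s")
    print("20 down / 20 up:", edge_oversubscription(20, 20))
    print("30 down / 10 up:", edge_oversubscription(30, 10))
```

A ratio above 1.0 lowers cost per attached host but means hosts can contend for uplink bandwidth, so it is worth checking against the traffic pattern of the intended workload.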

Factors Affecting InfiniBand Switch Price

The price of an InfiniBand switch is affected by many factors. Generally speaking, NDR 400G models are more expensive than HDR 200G models, because differences in core technology, such as the chip manufacturing process, optical engine cost, and cooling system, lead to significant cost differences.

In terms of software licensing, original equipment usually includes basic SM services, but enterprise-level UFM functions usually require a separately paid license and may run on a dedicated device, and pricing varies between UFM versions.

In terms of procurement channels, original equipment bought directly is generally priced relatively high. Buying through certified distributors can reduce the procurement cost by 15% to 20%. Some refurbished products are cheaper still, at almost 50% of the price of new equipment, but they may come without warranty or after-sales support.

If you are looking for a competitively priced InfiniBand switch, you can contact LSOLINK sales for a customized solution. LSOLINK can provide a complete set of InfiniBand equipment and matching optical modules or DAC products, which can reduce the purchase cost of your overall solution.

Frequently Asked Questions (FAQ)

Q: Does an InfiniBand network support IP traffic?

A: Yes, InfiniBand networks support IP traffic through the IP over InfiniBand (IPoIB) protocol. IPoIB carries traditional IP packets over the InfiniBand fabric, so existing IP-based applications and tools can run on it without modification. This is useful for users who need to run standard IP applications in high-performance networks.
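As a small illustration of what this means in practice, the sketch below is an ordinary TCP echo round trip written with standard sockets. Nothing in it is InfiniBand-specific, which is the point: on a host with IPoIB configured, the same code would work if `addr` were the IP address assigned to the IPoIB interface (often named `ib0` on Linux, which is an assumption about your setup). The loopback address is used here only so that the example runs anywhere.

```python
import socket
import threading


def serve_once(bind_addr: str, ready: threading.Event, port_box: list) -> None:
    """Accept a single connection and echo back whatever it sends."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as srv:
        srv.bind((bind_addr, 0))            # port 0: let the OS pick a free port
        srv.listen(1)
        port_box.append(srv.getsockname()[1])
        ready.set()
        conn, _ = srv.accept()
        with conn:
            conn.sendall(conn.recv(1024))


def echo_roundtrip(addr: str, message: bytes) -> bytes:
    """Start a one-shot echo server on `addr` and send it one message."""
    ready, port_box = threading.Event(), []
    server = threading.Thread(
        target=serve_once, args=(addr, ready, port_box), daemon=True
    )
    server.start()
    if not ready.wait(timeout=5):
        raise TimeoutError("echo server did not start")
    with socket.create_connection((addr, port_box[0]), timeout=5) as client:
        client.sendall(message)
        reply = client.recv(1024)
    server.join(timeout=5)
    return reply


if __name__ == "__main__":
    # Replace 127.0.0.1 with the IPoIB interface address to send over the fabric.
    print(echo_roundtrip("127.0.0.1", b"ping"))
```

Traffic sent this way still passes through the kernel's IP stack, so it does not get the full latency benefit of native RDMA; IPoIB is mainly a compatibility path.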

Q: How do I tell if my system needs to be upgraded to an InfiniBand network?

A: If your system has bottlenecks in data transmission, especially in application scenarios with extremely high bandwidth and latency requirements such as large-scale parallel computing, real-time data analysis, or high-frequency trading, upgrading to an InfiniBand network may be a wise choice. The high bandwidth and low latency provided by InfiniBand can significantly improve the overall performance of the system.

Q: Are InfiniBand switches complicated to deploy and maintain?

A: The deployment and maintenance of InfiniBand switches are relatively complex, mainly involving network topology design, subnet manager configuration, and compatibility with other network devices. Of course, choosing LSOLINK’s experienced technical support team can effectively reduce the complexity of deployment and maintenance.