NVIDIA H100 GPU InfiniBand Solution

Overview:

The complete H100 Smart Computing Cluster consists of five major parts: the compute resource pool, the storage resource pool, the management resource pool, the core network and the secure access network.

The LSOLINK H100 InfiniBand solution is built on NVIDIA H100 GPUs, the NVIDIA Quantum-2 and NVIDIA Quantum platforms, and LSOLINK S5000M series switches. Together they form a high-performance campus network comprising the compute, storage, out-of-band management and in-band management networks.

Compute Network (255+1 nodes as an example):

- The fabric uses a standard two-tier fat-tree architecture; both the leaf and spine devices are NVIDIA MQM97XX series switches.

- Each scalable unit (SU) contains 32 H100 GPU servers, and the fabric supports a maximum of 8 SUs.

- Within an SU, the CX7 NICs with the same index on all 32 GPU servers connect to the same leaf switch. For example, the CX7 NICs serving GPU 1 on Server-001 to Server-032 all connect to ports IB1/1/1 to IB1/16/2 of Leaf1. Each SU therefore has 8 leaf switches, enough to terminate the NICs of all 8 GPUs on every server in the SU.

- 1:1 network convergence ratio, i.e., each leaf has the same number of uplink and downlink ports.

- The uplink interfaces of the Leaf switches are connected to each of the spine switches.

- SU8 contains 31 H100 GPU servers and 2 UFM management servers. Once a cluster exceeds 64 H100 GPU servers, a traditional subnet manager struggles to manage the whole InfiniBand network, so clusters of this size should be managed with UFM.
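The rail-style cabling rule above (same NIC index, same leaf) can be expressed as a small mapping function. This is a minimal sketch under assumptions that the document does not state: leaves are numbered consecutively per SU (Leaf1 to Leaf8 in SU1, Leaf9 to Leaf16 in SU2, and so on), and servers fill a leaf's 16 twin-port OSFP cages in order, two per cage, so Server-001 lands on IB1/1/1 and Server-032 on IB1/16/2. The source only gives the endpoints of that port range, so the ordering and the helper name `leaf_port` are hypothetical.

```python
from typing import Tuple

SERVERS_PER_SU = 32   # H100 servers per scalable unit (SU)
GPUS_PER_SERVER = 8   # one CX7 NIC per GPU, so one leaf per GPU index
MAX_SUS = 8

def leaf_port(server: int, gpu: int) -> Tuple[str, str]:
    """Map a global server number (1-based) and GPU index (1-8) to (leaf, port).

    Hypothetical numbering: leaves are numbered consecutively per SU, and
    servers fill the leaf's OSFP cages in order, two servers per cage.
    """
    if not 1 <= server <= SERVERS_PER_SU * MAX_SUS:
        raise ValueError("server out of range")
    if not 1 <= gpu <= GPUS_PER_SERVER:
        raise ValueError("gpu out of range")
    su = (server - 1) // SERVERS_PER_SU       # 0-based SU index
    slot = (server - 1) % SERVERS_PER_SU      # 0..31 position inside the SU
    leaf = su * GPUS_PER_SERVER + gpu         # same GPU index -> same leaf in the SU
    cage = slot // 2 + 1                      # OSFP cage 1..16
    sub = slot % 2 + 1                        # port 1 or 2 of the twin-port cage
    return f"Leaf{leaf}", f"IB1/{cage}/{sub}"

if __name__ == "__main__":
    print(leaf_port(1, 1))    # ('Leaf1', 'IB1/1/1')
    print(leaf_port(32, 1))   # ('Leaf1', 'IB1/16/2')
    print(leaf_port(33, 8))   # ('Leaf16', 'IB1/1/1')
```

Because GPU n of every server in an SU lands on the same leaf, traffic between same-index GPUs within one SU crosses a single leaf switch and never needs to reach the spine layer.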

| Product | Device Details | Model | Descriptions |
|---|---|---|---|
| Server | GPU H100 server | | 8* H100 GPU |
| | | | 8* Single-port OSFP CX7 NIC (for compute network) |
| | | | 2* Single-port 200G QSFP56 CX6 NIC (for storage network) |
| | | | 1* Dual-port 25G SFP28 CX6 NIC (for management network) |
| | UFM server | | 4* Single-port OSFP CX7 NIC (for compute network) |
| | | | 2* Single-port 200G QSFP56 CX6 NIC (for storage network) |
| | | | 1* Dual-port 25G SFP28 CX6 NIC (for management network) |
| Switch | Spine switch | MQM9790-NS2F | NVIDIA MQM9790-NS2F Mellanox Quantum™-2 NDR InfiniBand Switch, 64 NDR ports, 32 OSFP ports, unmanaged, P2C airflow (forward) |
| | Leaf switch | MQM9790-NS2F | NVIDIA MQM9790-NS2F Mellanox Quantum™-2 NDR InfiniBand Switch, 64 NDR ports, 32 OSFP ports, unmanaged, P2C airflow (forward) |
| Optical Transceiver | Leaf-spine links | NDR-OSFP-2SR4 | 800GBASE-2xSR4 OSFP NDR PAM4 850nm 50m DOM Dual MTP/MPO-12 APC MMF Transceiver Module, Finned Top |
| | Server-leaf links | NDR-OSFP-2SR4 | 800GBASE-2xSR4 OSFP NDR PAM4 850nm 50m DOM Dual MTP/MPO-12 APC MMF Transceiver Module, Finned Top |
| | Server-leaf links | NDR-OSFP-SR4 | 400GBASE-SR4 OSFP NDR PAM4 850nm 50m DOM MTP/MPO-12 APC MMF Transceiver Module, Flat Top |
| Fibre Optic Cable | Leaf-spine links | OM4-B-MPO8FA-MPO8FA | MPO-8 (Female APC) to MPO-8 (Female APC) OM4 Multimode Elite Trunk Cable, 8 Fibers, Type B, Plenum (OFNP), Magenta |
| | Server-leaf links | OM4-B-MPO8FA-MPO8FA | MPO-8 (Female APC) to MPO-8 (Female APC) OM4 Multimode Elite Trunk Cable, 8 Fibers, Type B, Plenum (OFNP), Magenta |

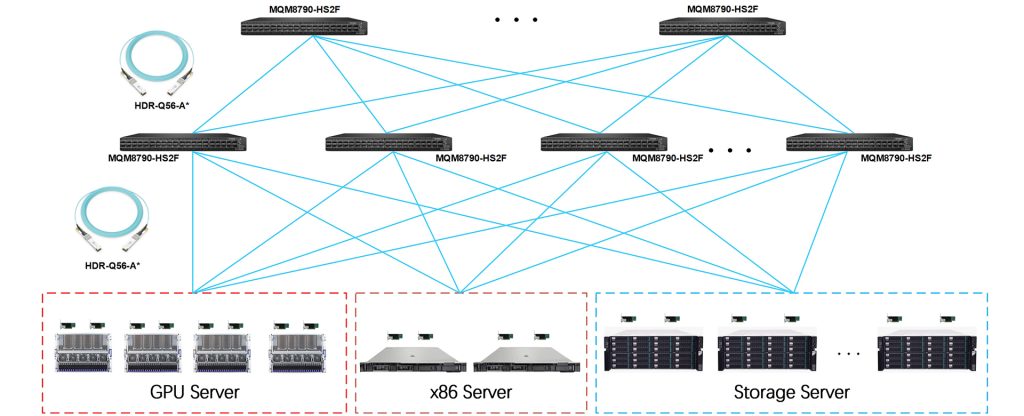
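The switch and optics quantities for the full 8-SU example follow from the 1:1 ratio and the port counts in the table. The sketch below is an estimate, not a bill of materials, and rests on these assumptions: each MQM9790 exposes 64 NDR 400G ports through 32 twin-port OSFP cages; each leaf splits its ports evenly between downlinks and uplinks; each switch-side NDR-OSFP-2SR4 serves two 400G links while each server-side NDR-OSFP-SR4 serves one; and one MPO-8 trunk cable carries one 400G link. The two UFM servers in SU8 contribute 2 × 4 CX7 ports, the same 8 as a 32nd GPU server, which appears to be why the example counts 255+1 nodes, so the port total is unchanged. The function name and dictionary keys are hypothetical.

```python
import math
from typing import Dict

def compute_fabric_bom(sus: int = 8, servers_per_su: int = 32,
                       nics_per_server: int = 8,
                       switch_ports: int = 64) -> Dict[str, int]:
    """Estimate switch, transceiver and cable counts for a two-tier 1:1 NDR fabric."""
    endpoints = sus * servers_per_su * nics_per_server   # 400G host-facing ports
    down_per_leaf = switch_ports // 2                     # 1:1 -> half the ports face servers
    up_per_leaf = switch_ports - down_per_leaf            # ...and half face the spines
    leaves = math.ceil(endpoints / down_per_leaf)
    spine_ports_needed = leaves * up_per_leaf             # every leaf uplink ends on a spine port
    spines = math.ceil(spine_ports_needed / switch_ports)
    # switch-side 400G ports: leaf downlinks + leaf uplinks + spine ports, two per 2SR4 module
    switch_side_ports = endpoints + 2 * spine_ports_needed
    return {
        "endpoints": endpoints,
        "leaves": leaves,
        "spines": spines,
        "server_side_sr4": endpoints,                     # one single-port OSFP per CX7 NIC
        "switch_side_2sr4": switch_side_ports // 2,
        "mpo8_cables": endpoints + spine_ports_needed,    # server-leaf + leaf-spine links
    }

if __name__ == "__main__":
    print(compute_fabric_bom())
```

With the defaults this yields 2048 endpoints, 64 leaves, 32 spines, 2048 NDR-OSFP-SR4 modules on the servers, 3072 NDR-OSFP-2SR4 modules on the switches and 4096 MPO-8 trunk cables.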

Storage Network (255+1 nodes as an example):

- The storage network uses a fat-tree architecture, with NVIDIA MQM87XX series switches as both the leaf and spine devices.

- Whether the fabric is deployed with two or three tiers, every tier boundary must keep a 1:1 convergence ratio between upstream and downstream traffic.

- 200G QSFP56 AOCs (active optical cables) are used as the transmission medium. Because the optics and fibre are integrated into a single electro-optical assembly, AOCs are plug-and-play and reduce cabling complexity by 80% compared with the traditional approach of separate optical modules and fibre patch cords.
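Whether two or three tiers are needed comes down to switch radix. For a non-blocking (1:1) fat tree built from k-port switches, two tiers reach at most k²/2 host ports and three tiers at most k³/4 (the classic k-ary fat tree). The sketch below applies this to the 40-port MQM87XX and gives a port-count estimate for a two-tier storage fabric. The storage-server and x86-server counts in the example are made-up inputs, since the document does not state them, and the helper names are hypothetical.

```python
import math
from typing import Tuple

def max_host_ports(radix: int, tiers: int) -> int:
    """Host ports supported by a non-blocking fat tree of `radix`-port switches."""
    if tiers == 2:
        return radix * radix // 2       # k leaves x k/2 downlinks each
    if tiers == 3:
        return radix ** 3 // 4          # classic k-ary fat tree
    raise ValueError("only 2- or 3-tier fabrics are modelled")

def two_tier_size(host_ports: int, radix: int = 40) -> Tuple[int, int]:
    """Return (leaves, spines) for a 1:1 two-tier fabric (port-count estimate)."""
    if host_ports > max_host_ports(radix, 2):
        raise ValueError("exceeds two-tier capacity; a third tier is needed")
    down = radix // 2                   # half of each leaf's ports face hosts
    up = radix - down                   # the other half face the spines
    leaves = math.ceil(host_ports / down)
    spines = math.ceil(leaves * up / radix)
    return leaves, spines

if __name__ == "__main__":
    print(max_host_ports(40, 2), max_host_ports(40, 3))   # 800 16000
    # Hypothetical inputs: 255 GPU servers x 2, 2 UFM servers x 2,
    # 16 storage servers x 2, 4 x86 servers x 1 (last two counts are invented)
    ports = 255 * 2 + 2 * 2 + 16 * 2 + 4 * 1
    print(ports, two_tier_size(ports))                      # 550 (28, 14)
```

With 40-port switches a two-tier storage fabric tops out at 800 host ports, which covers the 255+1-node example; larger deployments would move to three tiers.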

| Product | Device Details | Model | Descriptions |
|---|---|---|---|
| Server | GPU H100 server | | 8* H100 GPU |
| | | | 8* Single-port OSFP CX7 NIC (for compute network) |
| | | | 2* Single-port 200G QSFP56 CX6 NIC (for storage network) |
| | | | 1* Dual-port 25G SFP28 CX6 NIC (for management network) |
| | UFM server | | 4* Single-port OSFP CX7 NIC (for compute network) |
| | | | 2* Single-port 200G QSFP56 CX6 NIC (for storage network) |
| | | | 1* Dual-port 25G SFP28 CX6 NIC (for management network) |
| | Storage server | | 2* Single-port 200G QSFP56 CX6 NIC (for storage network) |
| | x86 server | | Single-port 200G QSFP56 CX6 NIC (for storage network) |
| Switch | Spine switch | MQM8790-HS2F | NVIDIA MQM8700-HS2F Mellanox Quantum™ HDR InfiniBand Switch, 40 QSFP56 ports, 2 Power Supplies (AC), x86 dual core, standard depth, P2C airflow, Rail Kit |
| | Leaf switch | MQM8790-HS2F | NVIDIA MQM8700-HS2F Mellanox Quantum™ HDR InfiniBand Switch, 40 QSFP56 ports, 2 Power Supplies (AC), x86 dual core, standard depth, P2C airflow, Rail Kit |
| AOC | Leaf-spine links | HDR-Q56-A* | 1~100m QSFP56 HDR Active Optical Cable |
| | Server-leaf links | HDR-Q56-A* | 1~100m QSFP56 HDR Active Optical Cable |

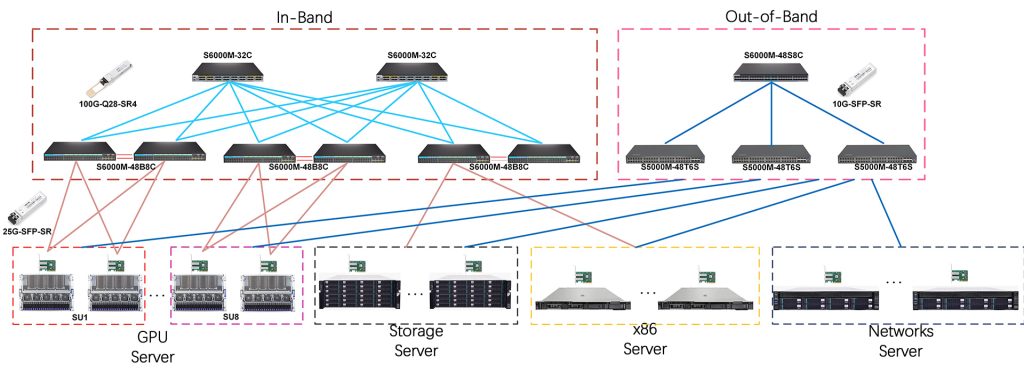

Management Network:

- Each GPU server typically uses a dual-port 25G management NIC bonded in mode 4 (LACP), and the leaf switches are deployed in a de-stacking (stack-free) or M-LAG architecture.

- The compute and storage networks are private: the compute and storage traffic of the GPU servers stays within each respective network. Management and out-of-band traffic, by contrast, must reach external networks, so this network is deployed with a three-tier architecture. Because the GPU servers' management NICs generally do not run RDMA, there is no specific convergence ratio requirement.

- The choice of management switches depends mainly on the speed of the servers' management NICs. For example, with 25G management NICs the S6000M-48B8C is a suitable leaf; with 10G NICs, models such as the S6000M-48S8C can be used.
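The selection rule and the LACP bonding described above can be sketched as a few helpers. This is a hypothetical sketch with several assumptions: the leaves are deployed as M-LAG pairs with each server's two bonded ports split across the pair, so a pair of 48-port leaves serves up to 48 servers; the servers use netplan for network configuration; and the interface names `ens1f0`/`ens1f1` are placeholders. The netplan keys shown (`mode`, `lacp-rate`, `mii-monitor-interval`, `transmit-hash-policy`) are standard bond parameters, but check them against your netplan version. The matching LACP port-channel on the switch side is not shown.

```python
from typing import List

# Selection rule from the text: 25G management NICs -> S6000M-48B8C leaves,
# 10G management NICs -> S6000M-48S8C leaves.
LEAF_BY_NIC_SPEED = {25: "S6000M-48B8C", 10: "S6000M-48S8C"}
LEAF_SERVER_PORTS = 48   # both models expose 48 server-facing ports

def pick_leaf(nic_speed_gbps: int) -> str:
    """Return the leaf model listed for a given management NIC speed."""
    try:
        return LEAF_BY_NIC_SPEED[nic_speed_gbps]
    except KeyError:
        raise ValueError(f"no leaf model listed for {nic_speed_gbps}G NICs") from None

def leaf_pairs_needed(servers: int) -> int:
    """M-LAG pairs needed if each server sends one bonded port to each peer (assumption)."""
    return -(-servers // LEAF_SERVER_PORTS)   # ceiling division

def netplan_bond(ifaces: List[str], bond: str = "bond0") -> str:
    """Render a netplan stanza for an LACP (bond mode 4 / 802.3ad) bond."""
    members = ", ".join(ifaces)
    return (
        "network:\n"
        "  version: 2\n"
        "  ethernets:\n"
        + "".join(f"    {name}: {{}}\n" for name in ifaces)
        + "  bonds:\n"
        f"    {bond}:\n"
        f"      interfaces: [{members}]\n"
        "      parameters:\n"
        "        mode: 802.3ad\n"
        "        lacp-rate: fast\n"
        "        mii-monitor-interval: 100\n"
        "        transmit-hash-policy: layer3+4\n"
    )

if __name__ == "__main__":
    print(pick_leaf(25), leaf_pairs_needed(256))   # S6000M-48B8C 6
    print(netplan_bond(["ens1f0", "ens1f1"]))
```

Splitting the two bonded ports across an M-LAG pair lets a server keep its management connectivity if one leaf fails, which is the usual reason for combining mode 4 bonding with M-LAG.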

| Product | Device Details | Model | Descriptions |
|---|---|---|---|
| Server | GPU H100 server | | 8* H100 GPU |
| | | | 8* Single-port OSFP CX7 NIC (for compute network) |
| | | | 2* Single-port 200G QSFP56 CX6 NIC (for storage network) |
| | | | 1* Dual-port 25G SFP28 CX6 NIC (for management network) |
| | UFM server | | 4* Single-port OSFP CX7 NIC (for compute network) |
| | | | 2* Single-port 200G QSFP56 CX6 NIC (for storage network) |
| | | | 1* Dual-port 25G SFP28 CX6 NIC (for management network) |
| | Storage server | | 1* Dual-port 25G SFP28 CX6 NIC (for management network) |
| | x86 server | | 1* Dual-port 25G SFP28 CX6 NIC (for management network) |
| Switch | In-band management switch | S6000M-48B8C | 48-Port Ethernet L3 Managed Switch, 48 x 25Gb SFP28, 8 x 100Gb QSFP28 |
| | | S6000M-32C | 32-Port Ethernet L3 Managed Switch, 32 x 100Gb QSFP28 |
| | Out-of-band management switch | S5000M-48T6S | 48-Port Gigabit Ethernet L3 Managed Switch, 48 x Gigabit RJ45, 6 x 10Gb SFP+ |
| | | S6000M-48S8C | 48-Port Ethernet L3 Managed Switch, 48 x 10Gb SFP+, 8 x 100Gb QSFP28 |
| Optical Transceiver | | 100G-Q28-SR4 | 100GBASE-SR4 QSFP28 100G 850nm 100m DOM MTP/MPO-12 UPC MMF Transceiver Module |
| | | 25G-SFP-SR | 25GBASE-SR SFP28 25G 850nm 100m DOM LC MMF Transceiver Module |
| | | 10G-SFP-SR | 10GBASE-SR SFP+ 10G 850nm 300m DOM LC MMF Transceiver Module |
| Fibre Optic Cable | | OM3-D-LCU-LCU | 1~100m LC UPC to LC UPC Duplex OM3 Multimode PVC (OFNR) 2.0mm Fiber Optic Patch Cable |
| | | OM3-B-MPO8FU-MPO8FU | MPO-8 (Female UPC) to MPO-8 (Female UPC) OM3 Multimode Elite Trunk Cable, 8 Fibers, Type B, Plenum (OFNP), Aqua |

Programme Value:

- Centralised management

Unify the management of the compute and storage networks in an H100 cluster with UFM. Monitor, troubleshoot, configure and optimise every aspect of the fabric through a single interface. UFM’s central dashboard provides a single status view of the entire fabric.

- Advanced traffic analysis

Unique traffic maps quickly reveal traffic trends, bottlenecks and congestion events across the fabric, enabling administrators to identify and resolve issues quickly and accurately.

- Rapid problem location and resolution

Comprehensive information from switches and hosts exposes traffic problems such as errors and congestion. This information is presented concisely in a unified dashboard and in configurable monitoring sessions. Monitoring data can be associated with each job and client, and threshold-based alerts can be set.

- The whole network uses a 1:1 convergence ratio, so it is non-blocking and lossless in both the upstream and downstream directions.

- Dual Broadcom chips (Broadcom VCSEL + DSP)

The single-product failure rate is below 0.5%, the pre-FEC BER is below 1E-8 and the post-FEC BER is 15E-255 (effectively error-free), giving long-term stable operation with no link flaps.

- Full compatibility with the NVIDIA device ecosystem

Deep integration with InfiniBand network protocols: all products have been tested against every version of NVIDIA device firmware, ensuring the optical transceivers are compatible with every NVIDIA device software release.

- 8-fibre high-density, low-insertion-loss MPO patch cords minimise the elevated baseline BER caused by optical coupling issues. A single NDR port uses 4 × 100G PAM4 SerDes lanes, so a link typically needs only 8 fibres (4 transmit, 4 receive); unlike a 12-fibre cable, an 8-fibre cable leaves no fibres unused.
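The fibre-count and BER figures above reduce to simple arithmetic. This sketch assumes parallel multimode SR4 optics with one transmit and one receive fibre per lane, and uses the nominal 400 Gb/s NDR data rate while ignoring FEC and encoding overhead (the actual lane signalling rate is somewhat higher). The helper names are hypothetical.

```python
LANES_PER_NDR_PORT = 4   # NDR 400G = 4 x 100G PAM4 SerDes lanes
FIBRES_PER_LANE = 2      # SR4 parallel optics: one Tx and one Rx fibre per lane

def fibres_used(ports: int = 1) -> int:
    """Fibres that actually carry light for `ports` NDR 400G SR4 links."""
    return ports * LANES_PER_NDR_PORT * FIBRES_PER_LANE

def dark_fibres(connector_fibres: int, ports: int = 1) -> int:
    """Unused fibres when each 400G link is cabled with one `connector_fibres`-fibre MPO."""
    return ports * (connector_fibres - fibres_used(1))

def raw_bit_errors_per_second(line_rate_gbps: float, ber: float) -> float:
    """Expected pre-FEC bit errors per second at a given bit error ratio."""
    return line_rate_gbps * 1e9 * ber

if __name__ == "__main__":
    print(fibres_used())                                 # 8
    print(dark_fibres(12), dark_fibres(8))               # 4 0
    # nominal 400 Gb/s at the 1E-8 pre-FEC threshold: roughly 4000 raw errors/s for FEC to correct
    print(round(raw_bit_errors_per_second(400, 1e-8)))   # 4000
```

Even a pre-FEC BER that looks small still produces thousands of raw bit errors per second at NDR speeds, which is why the post-FEC figure is the one that determines link stability.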

If your smart computing centre faces any of the following situations, please talk to our technical team one-on-one online to design a customised smart computing centre solution:

- The number of GPU servers in the cluster is non-standard (not a multiple of 32).

- Cluster expansion.

- Mixed clusters (A100 + H100).

- Clusters built on other compute cards (A800, L40S, L20, 4090).