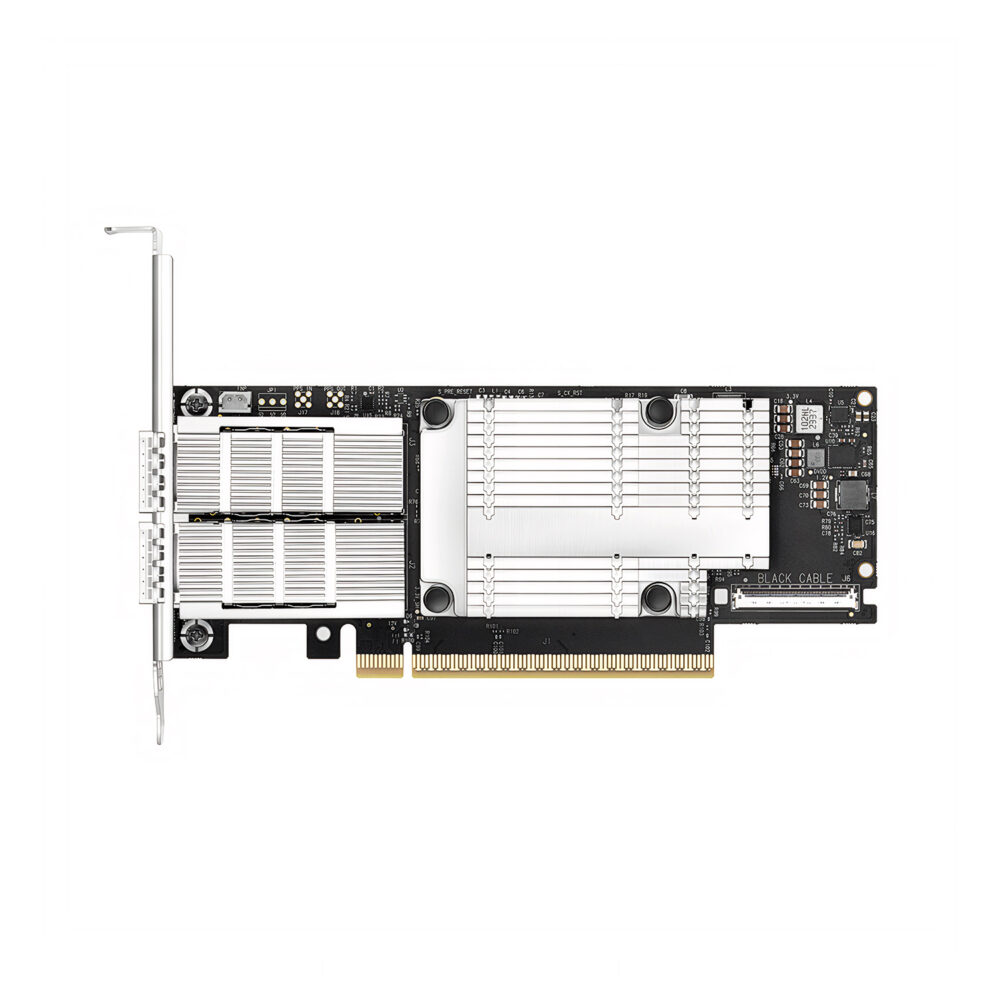

NVIDIA MCX755106AS-HEAT Mellanox ConnectX®-7 VPI Adapter Card 200GbE/NDR200, Dual-port QSFP112, PCIe 5.0 x16, with x16 PCIe Extension Option, Secure Boot, Tall & Short Bracket

#460303

SKU: MCX755106AS-HEAT

- Free shipping for orders over $99

- Available for delivery within 2 days

- 3-year warranty

- 30-day return and refund

- Lifetime technical support

Product Highlights

- Dual-Port QSFP112 for NDR200 InfiniBand & 200GbE Dual-Protocol

- Ultra-Low Latency with High Message Rate via RDMA

- Hardware-Accelerated XTS-AES Encryption (FIPS-Capable) with Block-Level Security

- PCIe 5.0 x16 Interface with Gen 4 Backward Compatibility

- End-to-End QoS & Congestion Control for Predictable Performance

- NVMe-over-Fabrics, In-Network Computing, and RoCE v2 Support
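As a rough illustration of why the PCIe generation of the host slot matters for a dual-port 200 Gb/s card, the sketch below compares the combined port rate with the usable one-direction bandwidth of an x16 slot. It assumes the standard PCIe per-lane signaling rates (16 GT/s for Gen 4, 32 GT/s for Gen 5) and 128b/130b encoding, and it ignores packet and protocol overhead, so real throughput will be somewhat lower. The function names are illustrative and not part of any NVIDIA tool.

```python
# Back-of-the-envelope PCIe link budget for a dual-port 200 Gb/s adapter.
# Assumption: only line-encoding overhead is modeled; TLP headers and
# flow-control traffic are ignored, so these are upper bounds.

PCIE_GT_PER_LANE = {"gen4": 16.0, "gen5": 32.0}  # GT/s per lane, per direction
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b encoding (PCIe 3.0 and later)


def pcie_bandwidth_gbps(generation: str, lanes: int = 16) -> float:
    """Return the post-encoding bandwidth of a PCIe link in Gb/s, one direction."""
    return PCIE_GT_PER_LANE[generation] * lanes * ENCODING_EFFICIENCY


def ports_fit_in_slot(port_speed_gbps: float, ports: int,
                      generation: str, lanes: int = 16) -> bool:
    """Return True if the combined port rate fits within the slot bandwidth."""
    return port_speed_gbps * ports <= pcie_bandwidth_gbps(generation, lanes)


if __name__ == "__main__":
    for gen in ("gen4", "gen5"):
        slot = pcie_bandwidth_gbps(gen)
        fits = ports_fit_in_slot(200, 2, gen)
        print(f"{gen} x16: {slot:.1f} Gb/s per direction, "
              f"2 x 200 Gb/s fits: {fits}")
```

Under these assumptions a Gen 5 x16 slot offers roughly 504 Gb/s per direction, which covers both ports at line rate, while a Gen 4 x16 slot offers roughly 252 Gb/s, so the two ports together would be limited by the host interface.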

Specifications

Product Certification

Features

The NVIDIA® ConnectX®-7 family of remote direct memory access (RDMA) network adapters supports InfiniBand and Ethernet protocols at speeds up to 400 gigabits per second (Gb/s). It enables a wide range of advanced, scalable, and secure networking solutions, from traditional enterprise needs to the world’s most demanding AI, scientific computing, and hyperscale cloud data center workloads.

ConnectX-7 provides a broad set of software-defined, hardware-accelerated networking, storage, and security capabilities, which enable organizations to modernize and secure their IT infrastructures. ConnectX-7 also powers agile and high-performance solutions from edge to core data centers to clouds, all while enhancing network security and reducing total cost of ownership.

ConnectX-7 provides ultra-low latency, extreme throughput, and innovative NVIDIA In-Network Computing engines to deliver the acceleration, scalability, and feature-rich technology needed for modern scientific computing workloads.

Applications

-

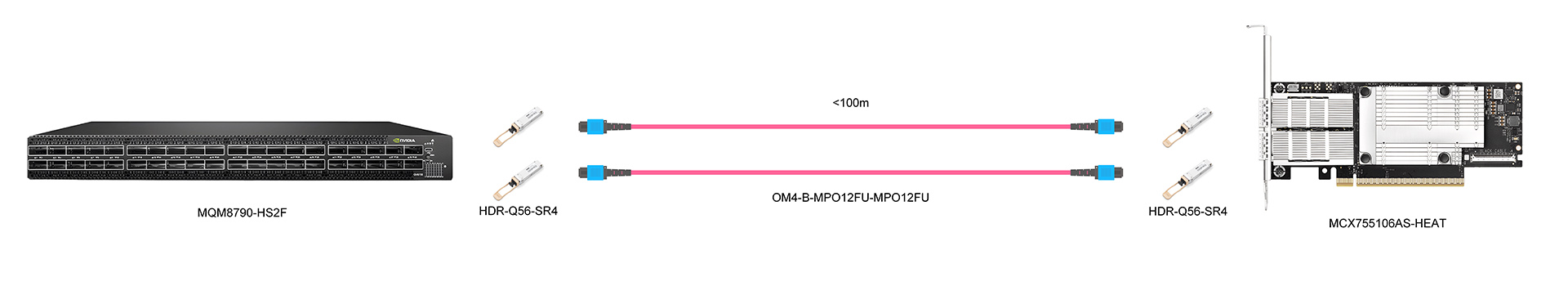

200G Switch to NIC Connection

-

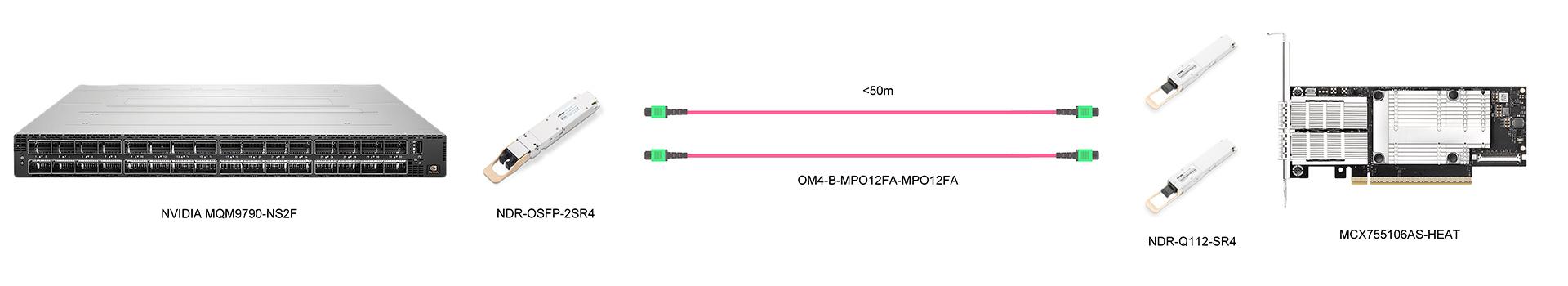

800G Switch to Dual Ports NIC Connection

Quality Testing Program

01. Product Hardware

02. Compatibility Testing

03. Quality Control

FAQs

What protocols and speeds does it support?

Can dual ports achieve 400Gb/s aggregated bandwidth?

What makes it suitable for AI cluster deployments?

What expansion options are available?